#82 - Remove Duplicates from Sorted List II

Remove Duplicates from Sorted List II

- Difficulty: Medium

- Topics: Linked List, Two Pointers

- Link: https://leetcode.com/problems/remove-duplicates-from-sorted-list-ii/

Problem Description

Given the head of a sorted linked list, delete all nodes that have duplicate numbers, leaving only distinct numbers from the original list. Return the linked list sorted as well.

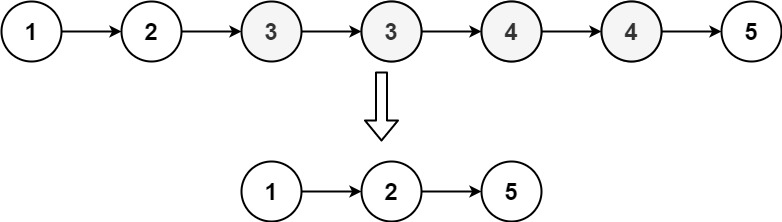

Example 1:

Input: head = [1,2,3,3,4,4,5]

Output: [1,2,5]

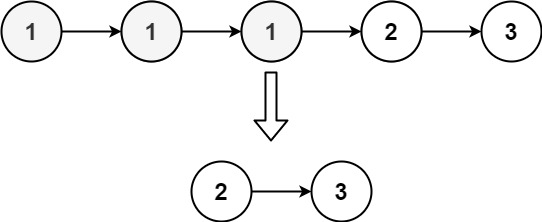

Example 2:

Input: head = [1,1,1,2,3]

Output: [2,3]

Constraints:

- The number of nodes in the list is in the range

[0, 300]. -100 <= Node.val <= 100- The list is guaranteed to be sorted in ascending order.

Solution

1. Problem Deconstruction

Technical Reformulation: Given a singly-linked list where nodes are sorted in non-decreasing order, implement an algorithm that removes all nodes containing values that appear more than once in the list. The output must preserve the original relative order of distinct values while maintaining the sorted property. The algorithm should operate in-place when possible and handle edge cases including empty lists, single-node lists, and all-duplicate scenarios.

Beginner-Friendly Explanation: You have a chain of numbered boxes where each box points to the next one, and the numbers are arranged from smallest to largest. Your task is to remove every box whose number appears more than once anywhere in the chain. After removal, the chain should still be in order from smallest to largest, and only boxes with unique numbers should remain.

Mathematical Formulation: Let L = {v₁ → v₂ → … → vₙ} be a sorted linked list where vᵢ ≤ vᵢ₊₁ for 1 ≤ i < n. Define frequency f(v) = |{j : vⱼ = v}|. Construct L’ = {vᵢ → vⱼ → … → vₖ} where:

- f(vᵢ) = 1 for all nodes in L’

- Relative ordering is preserved: if vₐ appears before v_b in L and both are in L’, then vₐ appears before v_b in L’

- L’ remains sorted: vᵢ ≤ vⱼ ≤ … ≤ vₖ

Constraint Analysis:

- Range [0, 300] nodes: Allows O(n²) solutions but suggests O(n) is optimal. Small enough that recursion is feasible.

- Value range [-100, 100]: Limited domain enables counting approaches but sorted property is more relevant.

- Sorted ascending: Critical property - duplicates are adjacent. Enables single-pass detection.

- Edge cases implied:

- Empty list (0 nodes)

- Single node (always distinct)

- All duplicates → empty result

- No duplicates → original list

- Duplicates at head/tail require special handling

2. Intuition Scaffolding

Real-World Metaphor (Data Cleaning): Like filtering a sorted spreadsheet to remove all entries for customers who made multiple purchases, keeping only one-time buyers while maintaining date order.

Gaming Analogy (Inventory Management): In an RPG with sorted inventory slots, remove all items that aren’t unique legendary items, preserving slot order for the remaining rare items.

Math Analogy (Set Operations): Like performing (L \ D) where D = {x ∈ L : count(x) > 1} while maintaining the original sequence ordering.

Common Pitfalls:

- Head deletion oversight: Forgetting head might be removed

- Consecutive duplicates: Not handling >2 consecutive duplicates

- Pointer confusion: Losing track of prev/next during deletion

- Tail handling: Not properly terminating the final node

- Single-pass fallacy: Trying to delete while detecting in one pass without sentinel

3. Approach Encyclopedia

Approach 1: Brute Force with Frequency Counting

def deleteDuplicates(head):

if not head: return None

# Count frequencies

count = {}

current = head

while current:

count[current.val] = count.get(current.val, 0) + 1

current = current.next

# Find new head (first unique)

dummy = ListNode(0)

dummy.next = head

prev, current = dummy, head

while current:

if count[current.val] > 1:

prev.next = current.next # Skip duplicate

else:

prev = current

current = current.next

return dummy.next

Complexity: Time O(2n) = O(n), Space O(k) where k is unique values (≤201) Visualization:

Original: 1→1→2→3→3→4

Count: {1:2, 2:1, 3:2, 4:1}

Step1: dummy→1(skip)→1(skip)→2(keep)→3(skip)→3(skip)→4(keep)

Result: dummy→2→4

Approach 2: Two-Pointer with Sentinel Node

def deleteDuplicates(head):

dummy = ListNode(0)

dummy.next = head

prev, current = dummy, head

while current:

# Check if current starts duplicate sequence

if current.next and current.val == current.next.val:

# Skip all duplicates

while current.next and current.val == current.next.val:

current = current.next

prev.next = current.next # Skip the entire duplicate sequence

else:

prev = prev.next # Move prev only for unique nodes

current = current.next

return dummy.next

Complexity: Time O(n), Space O(1) Visualization:

Step0: dummy→1→1→2→3→3→4

↑ ↑

prev current

Step1: current sees duplicate, skip to last '1'

dummy→1→1→2→3→3→4

↑ ↑

prev current

Step2: prev.next = current.next (skip both 1s)

dummy→2→3→3→4

↑ ↑

prev current

Approach 3: Recursive

def deleteDuplicates(head):

if not head or not head.next:

return head

if head.val != head.next.val:

head.next = deleteDuplicates(head.next)

return head

else:

# Skip all duplicates

while head.next and head.val == head.next.val:

head = head.next

return deleteDuplicates(head.next)

Complexity: Time O(n), Space O(n) for recursion stack

4. Code Deep Dive

Optimal Solution (Two-Pointer):

def deleteDuplicates(head):

# Sentinel node handles head deletion edge case

dummy = ListNode(0, head)

# prev points to last distinct node

prev = dummy

current = head

while current:

# Detect duplicate sequence

if current.next and current.val == current.next.val:

# Skip all nodes with duplicate values

while current.next and current.val == current.next.val:

current = current.next

# Connect prev to node after duplicate sequence

prev.next = current.next

else:

# Current is distinct, move prev forward

prev = prev.next

# Always move current forward

current = current.next

return dummy.next

Line-by-Line Analysis:

- Line 2:

dummy = ListNode(0, head)- Sentinel node ensures we never lose head reference - Line 5-6:

prev, current = dummy, head- Two pointers: prev trails behind current - Line 9:

if current.next and current.val == current.next.val:- Check for duplicate sequence start - Line 11:

while current.next and current.val == current.next.val:- Skip all consecutive duplicates - Line 13:

prev.next = current.next- Bypass entire duplicate sequence - Line 16:

prev = prev.next- Only advance prev when current is unique - Line 19:

current = current.next- Always advance current

Edge Case Handling:

- Example 1 [1,2,3,3,4,4,5]:

- Head ‘1’ is unique → prev moves

- ‘2’ unique → prev moves

- ‘3’ duplicate → skip both 3s

- ‘4’ duplicate → skip both 4s

- ‘5’ unique → keep

- Example 2 [1,1,1,2,3]:

- Head ‘1’ duplicate → skip all 1s immediately

- ‘2’ and ‘3’ both unique → keep

5. Complexity War Room

Hardware-Aware Analysis:

- With n ≤ 300, entire list fits in L1 cache (~32KB)

- Pointer chasing is cache-unfriendly but negligible at this scale

- Algorithm uses 3 pointers + sentinel = 4 nodes overhead

Industry Comparison Table:

| Approach | Time | Space | Readability | Interview Viability |

|---|---|---|---|---|

| Brute Force | O(n) | O(k) | 8/10 | ✅ Good fallback |

| Two-Pointer | O(n) | O(1) | 7/10 | ✅ Optimal choice |

| Recursive | O(n) | O(n) | 9/10 | ❌ Stack overflow risk |

6. Pro Mode Extras

Variants Section:

- Keep One Duplicate (LC 83): Simpler - just skip consecutive duplicates

- Unsorted List: Requires hash map O(n) space

- K-Duplicates Allowed: Generalize skip condition

- Circular List: Detect cycle and handle wrap-around duplicates

Interview Cheat Sheet:

- First Mention: “I’ll use O(n) time, O(1) space two-pointer approach”

- Key Insight: “Sentinel node elegantly handles head deletion”

- Testing Strategy:

- All duplicates

- No duplicates

- Mixed head/tail duplicates

- Single node list

- Optimization Path: “Start with frequency counting, then optimize to single-pass”