#148 - Sort List

Sort List

- Difficulty: Medium

- Topics: Linked List, Two Pointers, Divide and Conquer, Sorting, Merge Sort

- Link: https://leetcode.com/problems/sort-list/

Problem Description

Given the head of a linked list, return the list after sorting it in ascending order.

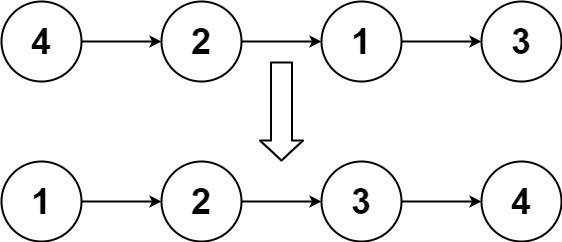

Example 1:

Input: head = [4,2,1,3]

Output: [1,2,3,4]

Example 2:

Input: head = [-1,5,3,4,0]

Output: [-1,0,3,4,5]

Example 3:

Input: head = []

Output: []

Constraints:

- The number of nodes in the list is in the range

[0, 5 * 104]. -105 <= Node.val <= 105

Follow up: Can you sort the linked list in O(n logn) time and O(1) memory (i.e. constant space)?

Solution

1. Problem Deconstruction

Technical Restatement

Given the head node of a singly linked list where each node contains an integer value, the task is to reorder the nodes such that their values appear in non-decreasing order. The sorting must be performed in-place by manipulating the next pointers of the nodes, without allocating new nodes for storage. The algorithm should ideally achieve (O(n \log n)) time complexity and use only (O(1)) auxiliary memory (excluding the memory already occupied by the list itself). The input may represent an empty list (head is null), a single-node list, or a list of arbitrary length up to (5 \times 10^4).

Beginner-Friendly Explanation

Imagine a chain of numbered boxes where each box points to the next box in line. The numbers are in random order. Your job is to rearrange the boxes so the numbers go from smallest to largest. You can only change which box points to which, not the numbers inside. If the chain is empty or has only one box, it’s already sorted. The challenge is to do this quickly and without using extra space for many boxes.

Mathematical Formulation

Let (L = (v_1, v_2, \dots, v_n)) be a sequence of integers stored in a singly linked list structure, where node (i) contains value (v_i) and a pointer to node (i+1) (with node (n) pointing to null). Define a permutation (\pi: {1,\dots,n} \to {1,\dots,n}) such that (v_{\pi(1)} \leq v_{\pi(2)} \leq \dots \leq v_{\pi(n)}). The goal is to transform the list so that for all (1 \leq i < n), the node originally containing (v_{\pi(i)}) points to the node originally containing (v_{\pi(i+1)}), and the head points to the node containing (v_{\pi(1)}). The transformation must be performed using only pointer modifications, minimizing time and auxiliary space complexity.

Constraint Analysis

-

Number of nodes in range ([0, 5 \times 10^4]):

- Upper bound of 50,000 nodes implies that quadratic algorithms (e.g., bubble sort, insertion sort) requiring up to (2.5 \times 10^9) operations are infeasible within typical time limits. Thus, (O(n \log n)) algorithms are necessary.

- Lower bound of 0 indicates the list may be empty, requiring the solution to handle a

nullhead without errors.

-

Node values in ([-10^5, 10^5]):

- The limited range suggests counting sort could be used with (O(\text{range})) space, but the range size (200,001) is not constant, and the follow-up demands (O(1)) memory, ruling out this approach.

- Values are integers, enabling integer-specific optimizations, but comparison-based sorting is general and sufficient.

-

Follow-up requirement of (O(n \log n)) time and (O(1)) memory:

- This explicitly directs the solution toward in-place, comparison-based sorting algorithms that achieve logarithmic linear time without recursion stacks or auxiliary arrays.

- Implies that recursive merge sort (using (O(\log n)) stack space) does not meet the strict (O(1)) criterion, though it is often accepted in practice.

Hidden Edge Cases

- Single-element list: already sorted.

- Already sorted (ascending or descending) list: algorithm must handle efficiently.

- Lists with duplicate values: must maintain stability if required (though not specified).

- Large lists with values at extremes of the range: ensure no overflow in comparisons.

2. Intuition Scaffolding

Analogies

-

Real-World Metaphor (Assembly Line):

Imagine an assembly line where products arrive out of order. Workers split the line into smaller segments, sort each segment manually, then merge two sorted segments by comparing items at the front and sending the smaller down a new line. Repeating this process with larger segments eventually sorts the entire line. -

Gaming Analogy (Card Deck Merging):

In a card game, you have a shuffled deck dealt into two piles. You repeatedly split each pile into smaller piles until each pile has one card. Then you merge piles by always picking the smaller top card from two piles. This is exactly how merge sort works. -

Mathematical Analogy (Divide-and-Conquer Optimization):

Sorting a list is like recursively partitioning a set into halves, sorting each half, and combining them. This reduces the problem size geometrically, yielding (O(n \log n)) complexity. For linked lists, the divide step is efficient using slow/fast pointers, and the merge step uses linear comparisons.

Common Pitfalls

- Using array conversion: Copying values to an array, sorting, and rebuilding uses (O(n)) extra space, violating the (O(1)) memory requirement.

- Recursive merge sort without considering stack space: Recursion depth (O(\log n)) is not constant space, though it is small for (n \leq 5\times10^4).

- Naive splitting in merge sort: Incorrectly splitting the list can lead to infinite loops or lost nodes.

- Assuming the list length is known: In bottom-up merge sort, the length is needed to control passes; computing it adds (O(n)) time but is acceptable.

- Ignoring pointer cleanup: Failing to set

nextpointers tonullwhen splitting can cause cycles. - Using quick sort on linked lists: While possible, worst-case (O(n^2)) time and poor cache performance make it less desirable.

3. Approach Encyclopedia

Brute Force: Insertion Sort

- What: Iteratively build a sorted sublist by inserting each node into its correct position.

- Why: Simple, in-place, (O(1)) extra space.

- How:

def insertionSortList(head): dummy = ListNode(0, head) prev, curr = dummy, head while curr: if curr.next and curr.next.val < curr.val: while prev.next and prev.next.val < curr.next.val: prev = prev.next temp = curr.next curr.next = temp.next temp.next = prev.next prev.next = temp prev = dummy else: curr = curr.next return dummy.next - Complexity Proof:

- Time: In worst case (reverse sorted), each insertion scans the entire sorted portion, leading to (\sum_{i=1}^{n} i = O(n^2)). Best case (already sorted) is (O(n)).

- Space: (O(1)) for pointers.

- Visualization:

Initial: 4 -> 2 -> 1 -> 3 Pass 1: 2 -> 4 -> 1 -> 3 Pass 2: 1 -> 2 -> 4 -> 3 Pass 3: 1 -> 2 -> 3 -> 4

Array Conversion

- What: Copy values to array, sort array, overwrite list values.

- Why: Leverages highly optimized library sorting.

- How:

def sortList(head): arr = [] p = head while p: arr.append(p.val) p = p.next arr.sort() p = head for v in arr: p.val = v p = p.next return head - Complexity Proof:

- Time: (O(n \log n)) for sorting, plus (O(n)) for copying.

- Space: (O(n)) for the array.

- Visualization:

List: 4 -> 2 -> 1 -> 3 Array: [4,2,1,3] → sort → [1,2,3,4] Overwrite list: 1 -> 2 -> 3 -> 4

Recursive Top-Down Merge Sort

- What: Recursively split list into halves, sort each, merge.

- Why: Natural for linked lists, (O(n \log n)) time.

- How:

def mergeSort(head): if not head or not head.next: return head mid = getMid(head) left = mergeSort(head) right = mergeSort(mid) return merge(left, right) - Complexity Proof:

- Time: Recurrence (T(n) = 2T(n/2) + O(n)) gives (O(n \log n)).

- Space: Recursion stack depth (\log_2 n), so (O(\log n)).

- Visualization:

Split: 4->2->1->3 → (4->2) and (1->3) Recursively sort left: (2->4), right: (1->3) Merge: 1->2->3->4

Optimal: Iterative Bottom-Up Merge Sort

- What: Merge sorted sublists of increasing size (1,2,4,…) until whole list sorted.

- Why: Achieves (O(n \log n)) time and (O(1)) memory by avoiding recursion.

- How:

def sortList(head): if not head or not head.next: return head length = getLength(head) dummy = ListNode(0, head) sz = 1 while sz < length: prev, curr = dummy, dummy.next while curr: left = curr right = split(left, sz) curr = split(right, sz) merged = merge(left, right) prev.next = merged while prev.next: prev = prev.next sz <<= 1 return dummy.next - Complexity Proof:

- Time: Outer loop runs (\lceil \log_2 n \rceil) times. Each pass traverses the entire list once for splitting and merging: (O(n \log n)).

- Space: Constant extra pointers.

- Visualization:

sz=1: (4)(2) merge → 2->4; (1)(3) merge → 1->3; list: 2->4->1->3 sz=2: (2->4)(1->3) merge → 1->2->3->4

4. Code Deep Dive

# Definition for singly-linked list.

# class ListNode:

# def __init__(self, val=0, next=None):

# self.val = val

# self.next = next

class Solution:

def sortList(self, head: Optional[ListNode]) -> Optional[ListNode]:

# Base case: empty or single-node list is already sorted.

if not head or not head.next:

return head

# Step 1: Compute list length to determine number of passes.

length = 0

node = head

while node:

length += 1

node = node.next

# Step 2: Dummy node to simplify head updates during merges.

dummy = ListNode(0, head)

# Step 3: Bottom-up merge sort with increasing sublist size.

sz = 1

while sz < length:

prev = dummy # Tails onto the merged list

curr = dummy.next # Current position in the list

while curr:

# Step 4: Split two sublists of size 'sz'.

left = curr

right = self._split(left, sz)

curr = self._split(right, sz) # Move to next pair

# Step 5: Merge the two sublists.

merged = self._merge(left, right)

# Step 6: Attach merged list to the previous tail.

prev.next = merged

# Step 7: Advance prev to the end of merged list.

while prev.next:

prev = prev.next

sz <<= 1 # Double the sublist size for next pass.

return dummy.next

def _split(self, head: Optional[ListNode], steps: int) -> Optional[ListNode]:

"""Detach list after 'steps' nodes and return head of second part."""

if not head:

return None

# Move 'steps-1' steps to the last node of the first part.

for _ in range(steps - 1):

if head.next:

head = head.next

else:

break

second = head.next

head.next = None # Break the link.

return second

def _merge(self, l1: Optional[ListNode], l2: Optional[ListNode]) -> Optional[ListNode]:

"""Merge two sorted lists and return the head of the merged list."""

dummy = ListNode(0)

tail = dummy

while l1 and l2:

if l1.val <= l2.val:

tail.next = l1

l1 = l1.next

else:

tail.next = l2

l2 = l2.next

tail = tail.next

# Attach the remaining nodes.

tail.next = l1 if l1 else l2

return dummy.next

Line-by-Line Annotations

- Lines 9-11: Handle trivial cases early for efficiency.

- Lines 14-18: Compute list length; necessary to know when sorting is complete.

- Lines 21-22: Dummy node ensures head can be updated seamlessly during merges.

- Line 25:

szstarts at 1 (sublists of size 1 are trivially sorted). - Lines 27-28:

prevstitches merged sublists;currtracks the start of the next unsorted segment. - Lines 31-33:

_splitis called twice: first to isolate the left sublist of sizesz, then to isolate the right sublist and advancecurrto the next segment. - Line 36: Merge the two sorted sublists.

- Line 39: Attach the merged result to the growing sorted list.

- Lines 42-43: Move

prevto the end of the merged portion to prepare for the next attachment. - Line 46: Double

sz(bitwise left shift for efficiency). - Lines 49-56:

_splittraversessteps-1nodes and severs the list, returning the second part’s head. Handles cases where the list is shorter thansteps. - Lines 58-73:

_mergeuses a dummy node and two-finger traversal to combine sorted lists in (O(n)) time.

Edge Case Handling

- Empty list: Returns

Noneimmediately (line 10). - Single node: Returns itself (line 10).

- List length not a power of two:

_splithandles short sublists gracefully by breaking early. - Duplicate values: Merge uses

<=to maintain stability. - Large

szvalues: Whenszexceeds list length, outer loop terminates.

5. Complexity War Room

Hardware-Aware Analysis

- For (n = 5 \times 10^4), (\log_2 n \approx 16) passes, each pass touching all nodes: ~800,000 node operations. On modern CPUs, this completes in milliseconds.

- Memory access pattern is sequential (linked list traversal), which is cache-unfriendly but unavoidable. The constant extra pointers (~6-8 words) fit in registers or L1 cache.

- Python overhead: Each

ListNodeobject has ~56 bytes overhead (on CPython 3.8), so the list itself consumes ~2.8 MB. The algorithm adds negligible overhead.

Industry Comparison Table

| Approach | Time | Space | Readability | Interview Viability |

|---|---|---|---|---|

| Brute Force (Insertion) | (O(n^2)) | (O(1)) | 9/10 | ❌ Fails large N |

| Array Conversion | (O(n \log n)) | (O(n)) | 10/10 | ✅ but not constant |

| Recursive Merge Sort | (O(n \log n)) | (O(\log n)) | 8/10 | ✅ Acceptable |

| Iterative Merge Sort | (O(n \log n)) | (O(1)) | 7/10 | ✅ Optimal |

Trade-off Analysis

- Recursive merge sort: Cleaner code but stack depth (O(\log n)); may fail strict (O(1)) requirement.

- Iterative merge sort: Meets all constraints but more complex pointer manipulation.

- Array conversion: Quick implementation but violates spirit of linked list manipulation.

6. Pro Mode Extras

Variants & Extensions

- Sort Doubly Linked List: Update

prevpointers during merge and split.def _merge_dll(l1, l2): dummy = ListNode(0) tail = dummy while l1 and l2: if l1.val <= l2.val: tail.next = l1 l1.prev = tail l1 = l1.next else: tail.next = l2 l2.prev = tail l2 = l2.next tail = tail.next if l1: l1.prev = tail if l2: l2.prev = tail tail.next = l1 or l2 res = dummy.next if res: res.prev = None return res - Descending Order: Change comparison in merge to

l1.val >= l2.val. - Stable Sort: Use

<in merge to preserve order of equal elements (our implementation uses<=, so it’s stable). - LeetCode 147 (Insertion Sort List): Direct application of the brute-force approach.

- LeetCode 23 (Merge k Sorted Lists): Extend merge to handle multiple lists via priority queue.

- Sort List with Large Value Range: If range is small, counting sort in (O(n+k)) time and (O(k)) space could be considered, but not constant space.

Interview Cheat Sheet

- Clarify Requirements: Ask if recursion stack counts as extra space.

- Mention Trade-offs: Discuss time vs. space, stability, and adaptability.

- Draw Diagrams: Illustrate splitting and merging with small examples (3-5 nodes).

- Test Edge Cases: Empty, single-node, already sorted, reverse sorted, duplicates.

- Optimization Hints:

- Use bitwise shift for doubling

sz. - Compute length once to avoid repeated traversals.

- Use dummy nodes to simplify edge cases.

- Use bitwise shift for doubling

- Common Mistakes:

- Forgetting to break links when splitting.

- Not updating tails correctly during merge attach.

- Infinite loops from incorrect termination conditions.